Building a Secure Message Processing Infrastructure

A friend who works for a major financial firm once put a question to me that went something like this:

"...The explosion of machine-generated data from sources like logs, networks and devices has led to an exponential increase in data volume, and he would like to extract significant value from this real-time streaming data. Since this data needs to be processed in motion (or in-stream), security becomes more challenging. He would like to design an architecture that covers Authentication, Authorization and Encryption of the streaming data without compromising speed or functionality..."

His requirement got me thinking, so I searched Google for various combinations of 'streaming' and 'security' to see whether a suitable open-source project existed. I was not quite satisfied with what I found. While some technologies (such as Spark) address security to a large extent, what he actually wanted was a way to secure access to the streaming data itself, not to the applications that process it.

Streaming data essentially takes the form of messages, and applications that want to process it (such as those built using Spark or Storm) need to read these messages from a Messaging Middleware or Messaging Fabric.

Securing this Messaging Fabric therefore seemed to me a reasonable way to enforce security. Of course, this modifies the behavior of the Messaging Middleware, so in a way we may have to come up with a specialized version of the original Messaging Fabric software.

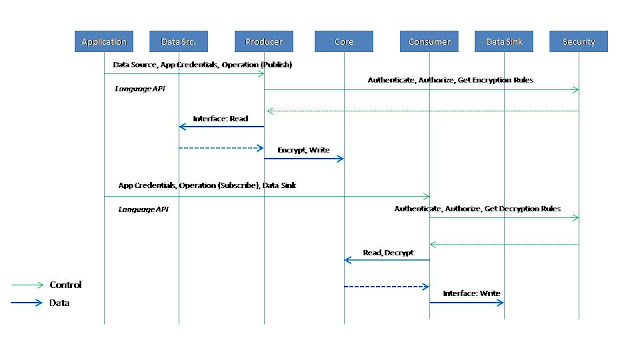

As such, I came up with the following high level architecture:

At the center is a Messaging Core, surrounded by a set of Producer and Consumer Layers (determined strictly by whether an application produces or consumes data). The applications themselves therefore act as either Sources or Sinks.

The Security feature interacts with the Producer and Consumer Layers, which interface directly with the applications. This means that whenever an application invokes the APIs for producing or consuming messages, it encounters security enforcement. It seemed pretty neat!

Security does not touch the Messaging Core, which stays plain vanilla and performs at its original speed.
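A minimal Python sketch of this interception idea is shown here. Everything in it is hypothetical: `MessagingCore` stands in for the unmodified core (Kafka, in the setup discussed below), and the HMAC-derived token and the `(application, topic, operation)` ACL are assumptions chosen for illustration, not part of any Kafka API. The point is only that the secure wrappers run every check before a call ever reaches the core.

```python
import hashlib
import hmac


class AccessDenied(Exception):
    """Raised when the Security Layer rejects a produce or consume call."""


def issue_token(secret: bytes, app_id: str) -> str:
    # Hypothetical credential: an HMAC-SHA256 of the application id under its secret.
    return hmac.new(secret, app_id.encode(), hashlib.sha256).hexdigest()


class SecurityLayer:
    """Hypothetical Security Layer: authenticate the app, then authorize (app, topic, operation)."""

    def __init__(self, app_secrets: dict, acl: set):
        self.app_secrets = app_secrets  # app id -> shared secret (managed externally)
        self.acl = acl  # allowed (app id, topic, operation) triples; everything else is denied

    def check(self, app_id: str, token: str, topic: str, operation: str) -> None:
        secret = self.app_secrets.get(app_id)
        if secret is None or not hmac.compare_digest(issue_token(secret, app_id), token):
            raise AccessDenied(f"authentication failed for {app_id}")
        if (app_id, topic, operation) not in self.acl:
            raise AccessDenied(f"{app_id} is not allowed to {operation} topic {topic}")


class MessagingCore:
    """Plain-vanilla core: stores and returns messages, knows nothing about security."""

    def __init__(self):
        self.topics = {}

    def append(self, topic: str, payload: bytes) -> None:
        self.topics.setdefault(topic, []).append(payload)

    def read(self, topic: str) -> list:
        return list(self.topics.get(topic, []))


class SecureProducer:
    def __init__(self, core, security, app_id, token):
        self.core, self.security, self.app_id, self.token = core, security, app_id, token

    def send(self, topic: str, payload: bytes) -> None:
        # Enforcement happens in the Producer Layer, before the core is touched.
        self.security.check(self.app_id, self.token, topic, "write")
        self.core.append(topic, payload)


class SecureConsumer:
    def __init__(self, core, security, app_id, token):
        self.core, self.security, self.app_id, self.token = core, security, app_id, token

    def poll(self, topic: str) -> list:
        # Enforcement happens in the Consumer Layer, before the core is touched.
        self.security.check(self.app_id, self.token, topic, "read")
        return self.core.read(topic)
```

Because the ACL is default-deny and keyed on the operation, an application that is allowed to read a topic cannot write to it, which is exactly the per-topic, per-operation control the article finds missing in the messaging products it surveys.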

The key points of this approach are as follows:

- Needs to scale to a large number of producers and consumers

- Can follow a publish-subscribe model

- Should be lightweight, involved only in the core tasks of message caching and movement

- In-memory caching with batched persistence to node-local disks for resilience

- Messages are not stored ‘forever’ (cache eviction)

- Many-to-many messaging should be possible

- For every application accessing the Messaging Fabric through the Producer/Consumer Layer, authentication and authorization rules and encryption/decryption keys are consulted at the Security Layer

- Security management (configuration of rules and keys) is done externally
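The caching, eviction, publish-subscribe and batched-persistence points can be illustrated with a small in-memory topic log. This is a hypothetical Python sketch, not how Kafka implements its log: `TopicCache`, its parameters and the JSON-lines file format are assumptions. Each consumer tracks its own offset, so many consumers can read the same topic independently, and any number of producers can call `publish`.

```python
import json
import os
from collections import deque


class TopicCache:
    """Hypothetical in-memory topic log with bounded retention and batched flushing."""

    def __init__(self, max_messages: int, flush_every: int, log_path: str):
        self.flush_every = flush_every
        self.log_path = log_path
        self.messages = deque(maxlen=max_messages)  # oldest entries are evicted automatically
        self.next_offset = 0  # offset assigned to the next published message
        self.pending = []  # published messages not yet persisted to disk
        self.consumer_offsets = {}  # consumer id -> next offset it should read

    def publish(self, payload: str) -> int:
        offset = self.next_offset
        self.messages.append((offset, payload))
        self.next_offset += 1
        self.pending.append({"offset": offset, "payload": payload})
        if len(self.pending) >= self.flush_every:
            self.flush()
        return offset

    def flush(self) -> None:
        # Batched persistence: append all pending records to the node-local log in one go.
        if not self.pending:
            return
        with open(self.log_path, "a", encoding="utf-8") as f:
            for record in self.pending:
                f.write(json.dumps(record) + "\n")
        self.pending.clear()

    def subscribe(self, consumer_id: str) -> None:
        # A new subscriber starts from the oldest message still held in memory.
        oldest = self.messages[0][0] if self.messages else self.next_offset
        self.consumer_offsets.setdefault(consumer_id, oldest)

    def poll(self, consumer_id: str) -> list:
        start = self.consumer_offsets[consumer_id]
        batch = [payload for offset, payload in self.messages if offset >= start]
        self.consumer_offsets[consumer_id] = self.next_offset
        return batch
```

One consequence of this design choice: a consumer that falls behind the in-memory window silently misses evicted messages, although they still exist in the on-disk log and could be replayed from there.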

The security properties of each part of the data pipeline are as follows:

- Application -> Producer/Consumer (Encrypted, Authenticated, Authorized)

- Producer -> Core (Encrypted)

- Core -> Consumer (Encrypted)
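One reading of this list is that the Producer Layer encrypts payloads implicitly, the core only ever stores ciphertext, and the Consumer Layer decrypts. The stdlib-only Python sketch below shows that flow. The cipher is a stand-in (HMAC-SHA256 in counter mode as the keystream, with an encrypt-then-MAC tag) chosen only so the example has no dependencies; a real system should use a vetted AEAD such as AES-GCM from a maintained library. `TOPIC_KEYS`, `produce` and `consume` are hypothetical names, and the Application-to-Producer hop (for example over TLS) is not shown.

```python
import hashlib
import hmac
import os

NONCE_LEN, TAG_LEN = 16, 32


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    # Stand-in stream cipher: HMAC-SHA256(key, nonce || counter) blocks, concatenated.
    out = bytearray()
    counter = 0
    while len(out) < length:
        out.extend(hmac.new(key, nonce + counter.to_bytes(8, "big"), hashlib.sha256).digest())
        counter += 1
    return bytes(out[:length])


def encrypt(enc_key: bytes, mac_key: bytes, plaintext: bytes) -> bytes:
    nonce = os.urandom(NONCE_LEN)
    stream = _keystream(enc_key, nonce, len(plaintext))
    ciphertext = bytes(p ^ s for p, s in zip(plaintext, stream))
    tag = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()  # encrypt-then-MAC
    return nonce + ciphertext + tag


def decrypt(enc_key: bytes, mac_key: bytes, blob: bytes) -> bytes:
    nonce, ciphertext, tag = blob[:NONCE_LEN], blob[NONCE_LEN:-TAG_LEN], blob[-TAG_LEN:]
    expected = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()
    if not hmac.compare_digest(expected, tag):
        raise ValueError("message authentication failed")
    stream = _keystream(enc_key, nonce, len(ciphertext))
    return bytes(c ^ s for c, s in zip(ciphertext, stream))


# Hypothetical per-topic keys, as handed out by the Security Layer.
TOPIC_KEYS = {"trades": (os.urandom(32), os.urandom(32))}

core_log = []  # the Messaging Core only ever holds ciphertext


def produce(topic: str, plaintext: bytes) -> None:
    # Producer Layer: encrypt without any help from the application.
    enc_key, mac_key = TOPIC_KEYS[topic]
    core_log.append((topic, encrypt(enc_key, mac_key, plaintext)))


def consume(topic: str) -> list:
    # Consumer Layer: verify and decrypt before handing data to the application.
    enc_key, mac_key = TOPIC_KEYS[topic]
    return [decrypt(enc_key, mac_key, blob) for t, blob in core_log if t == topic]
```

Keeping encryption in the Producer/Consumer Layers means the core never needs keys, which matches the goal of leaving it plain vanilla.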

Finally, the physical architecture is shown in the diagram below.

Note that the following points were critical in determining which set of technologies would be suitable for building such a system.

- Pushing data from producers to consumers securely is the main goal here. Currently, any consumer can read any topic and any producer can write to any topic

- Message queues like JMS (ActiveMQ et al.) do not support authorization based on operations or implicit (application-unassisted) encryption of the data stream. Only basic credential-based authentication is possible

- Kafka is lightweight and proven for large-scale publish-subscribe messaging using the producer/consumer paradigm

- Kafka currently does not support security, so the security layer needs to be built separately and integrated with the Kafka producer/consumer framework

- Security policies (A&A and encryption) are configured on the Security Server using a web client

- Sources and Sinks will be abstracted as interfaces. Back ends may be:
  - Internal queues/buffers (messages contained within the applications)
  - External applications
  - Others, such as DBs, files, etc.

- Applications (producer side or consumer side) can continue to use their own engines, such as Spark Streaming or Storm, to process the data
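The Source/Sink abstraction from the list above can be sketched with abstract base classes, here with an internal-queue back end and a file back end. The class names and the `pump` helper are hypothetical. In the architecture described above, the Producer Layer would sit between a Source and the core, and the Consumer Layer between the core and a Sink; `pump` connects a Source to Sinks directly only to keep the example short.

```python
import queue
from abc import ABC, abstractmethod
from pathlib import Path


class Source(ABC):
    @abstractmethod
    def read(self):
        """Return the next message, or None when nothing is available."""


class Sink(ABC):
    @abstractmethod
    def write(self, message: str) -> None:
        """Deliver one message to the back end."""


class QueueSource(Source):
    # Back end: an internal queue/buffer inside the application.
    def __init__(self, q: queue.Queue):
        self.q = q

    def read(self):
        try:
            return self.q.get_nowait()
        except queue.Empty:
            return None


class QueueSink(Sink):
    def __init__(self, q: queue.Queue):
        self.q = q

    def write(self, message: str) -> None:
        self.q.put(message)


class FileSink(Sink):
    # Back end: a local file, one message per line.
    def __init__(self, path):
        self.path = Path(path)

    def write(self, message: str) -> None:
        with self.path.open("a", encoding="utf-8") as f:
            f.write(message + "\n")


def pump(source: Source, sinks: list) -> int:
    """Hypothetical glue: drain a source and fan each message out to every sink."""
    count = 0
    while (message := source.read()) is not None:
        for sink in sinks:
            sink.write(message)
        count += 1
    return count
```

Since the layers depend only on `Source` and `Sink`, swapping a queue for a database or an external application means adding one more subclass rather than changing the messaging or security code.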

The overall solution outlined above seemed acceptable to my friend, and he acknowledged the need to build a separate, external, pluggable security module. Considering his application needs, Kafka as the core messaging fabric was a smart choice anyway.
